DNS makes for a deceptively easy service-discovery platform. Various platforms (such as Docker Swarm and Amazon Auto-Naming) purport to provide a trivial-to-use service discovery mechanism. Most applications already handle DNS, so it has the allure of working with legacy applications with zero integration work. Contrast DNS with purpose-built service discovery mechanisms such as Consul, ZooKeeper, etcd, or Netflix Eureka. These tools require additional integration effort from developers, but the result is much more robust. Below, I test how several platforms handle DNS round-robin load balancing.

DNS falls into the trap of being too easy to use. In this respect, DNS fails the duck test for service discovery: while it walks and talks like a duck, it is not actually a duck. Everyone ‘knows’ DNS. Every URL-handling library is already capable of handling DNS, so developers do not have to address discovery, and system integrators do not have to think about how to shim apps together. The trap is a vicious badger masquerading as a duck. While all URL libraries handle DNS, they are not robust enough to replace the behavior of a full-fledged service discovery (SD) system such as Consul. (I’ll speak mostly to Consul as I am familiar with it, but this is not to say its competitors are insufficient.) The DNS resolution libraries within various platforms differ significantly in behavior, causing sub-optimal application behavior. At small scale this is not an issue: all of them can competently resolve a name to a single address and connect. They differ more when the resolution changes or returns multiple values.

Take Java, for example: its poor DNS caching behavior is well documented, and it still requires configuration changes before it handles runtime changes in DNS responses even reasonably well. We’ll look at the current behavior in more detail.

Imagine a client application that has a significant number of backends: one client to 100 backends in the test case used here. Obviously, one backend cannot handle all of the load generated by the frontend, so the ideal behavior is for the traffic to be spread evenly across the backends. This test examines how several popular business application languages handle DNS resolution.
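As a rough illustration of what "spread evenly" asks of a client, here is a minimal Python sketch that looks up every A record for a name and picks one at random per request. The helper names `resolve_all` and `pick_backend` are hypothetical, and the `resolver` parameter exists only so the lookup can be swapped out for testing. None of this is taken from the test clients.

```python
import random
import socket


def resolve_all(hostname, port=80, resolver=socket.getaddrinfo):
    """Return every distinct IPv4 address the resolver reports for hostname."""
    infos = resolver(hostname, port, socket.AF_INET, socket.SOCK_STREAM)
    addresses = []
    for info in infos:
        # Each entry is (family, type, proto, canonname, sockaddr);
        # for IPv4, sockaddr is an (ip, port) tuple.
        ip = info[4][0]
        if ip not in addresses:
            addresses.append(ip)
    return addresses


def pick_backend(hostname, port=80, resolver=socket.getaddrinfo, rng=random):
    """Resolve on every call and choose one of the returned addresses at random."""
    return rng.choice(resolve_all(hostname, port, resolver))
```

Resolving on every call trades lookup overhead for freshness. A client that only ever connects to the first address returned gets whatever spread the resolver's ordering happens to provide.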

Integrating a full-fledged SD system gives the developer the up-to-the-second state of the environment (such as when performing Consul long-polls) and forces them to consider how to splay requests across the possible backends and how to react to changes in the topology. Applications that do not want to perform this level of integration can take advantage of shim mechanisms such as Consul Connect, FabioLB, or external dynamic load balancers such as Amazon ALB. While ALBs still utilize DNS, DNS for ALBs changes at a more ‘normal’ DNS pace, and a given node’s capacity is such that it is unlikely a single client can cause an overload.
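The two decisions mentioned above (how to splay requests, and how to react when the topology changes) can be sketched in a few lines of Python. This is an illustrative round-robin pool, not Consul's API: the `BackendPool` class and its `update` method are hypothetical, and how the new backend list arrives (a long-poll, a watch, a periodic lookup) is left to the caller.

```python
import threading


class BackendPool:
    """Round-robin over a backend list that can be swapped at runtime."""

    def __init__(self, backends):
        self._lock = threading.Lock()
        self._backends = list(backends)
        self._index = 0

    def update(self, backends):
        """Replace the backend list, e.g. when a discovery watch reports a change."""
        with self._lock:
            self._backends = list(backends)
            self._index = 0

    def next(self):
        """Return the next backend in rotation."""
        with self._lock:
            if not self._backends:
                raise RuntimeError("no backends available")
            backend = self._backends[self._index % len(self._backends)]
            self._index += 1
            return backend
```

For example, `BackendPool(["10.0.0.1", "10.0.0.2"])` hands out the two addresses alternately until `update` is called with a new list.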

Test setup

- Single-node Docker Swarm on a CentOS 7.5.1804 VM, running Docker 18.06.0-ce

- 100 Nginx containers, each configured to return its own IP address for /.

- Client images, each performing 10,000 requests, first naively and then with a connection pool.

See the Docker stack definition

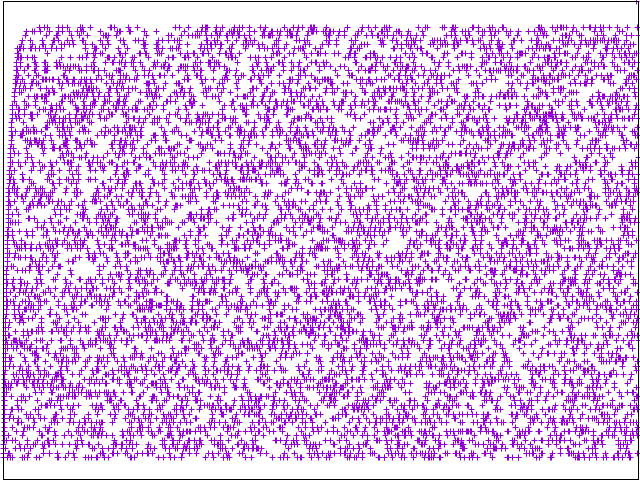

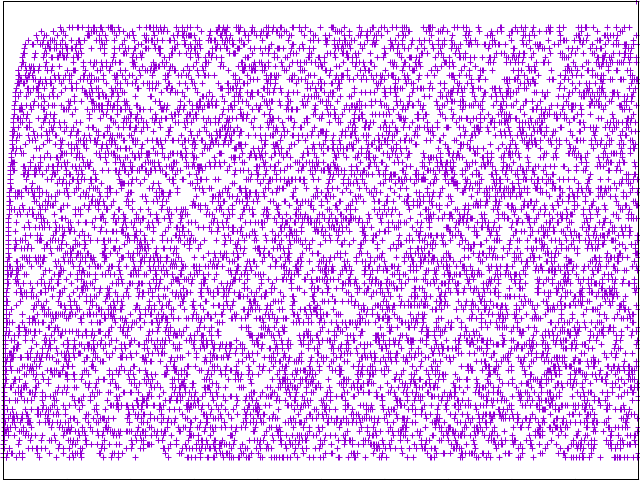

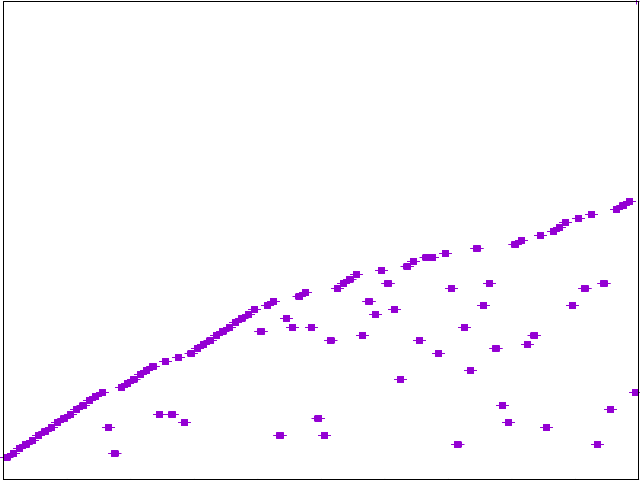

Docker Swarm is configured with endpoint_mode: dnsrr so that DNS resolution of the nginx service returns 100 A records. Each application first uses its generic URL request library to query nginx 10,000 times and outputs the result (the server IP address) each time. The next batch attempts to use the platform-specific connection-pool mechanism, as employing persistent connections is a very frequent optimization for HTTP-heavy applications. The results were filtered and the resulting IP addresses run through a Ruby script to map them to an x/y array.

The client service logs were filtered into distinct files, transformed with createpoints.rb, and then passed through gnuplot.
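The transformation script itself is not reproduced here. As a hedged sketch of what such a mapping could look like, the Python below turns a sequence of logged IP addresses into x/y points, assuming x is the request number and y is the backend's rank when the distinct addresses are sorted numerically. Both the mapping and the function names `to_points` and `format_for_gnuplot` are assumptions, not the contents of createpoints.rb.

```python
import ipaddress


def to_points(ips):
    """Map each logged IP to (request_number, backend_rank)."""
    # Sort distinct addresses numerically so 10.0.0.2 comes before 10.0.0.10.
    ordered = sorted(set(ips), key=ipaddress.ip_address)
    rank = {ip: i for i, ip in enumerate(ordered)}
    return [(x, rank[ip]) for x, ip in enumerate(ips)]


def format_for_gnuplot(points):
    """Render points as whitespace-separated 'x y' lines, one per request."""
    return "\n".join(f"{x} {y}" for x, y in points)
```

With this layout, an even spread shows up as points scattered across the full height of the plot, while a client pinned to one backend draws a horizontal line.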

Results

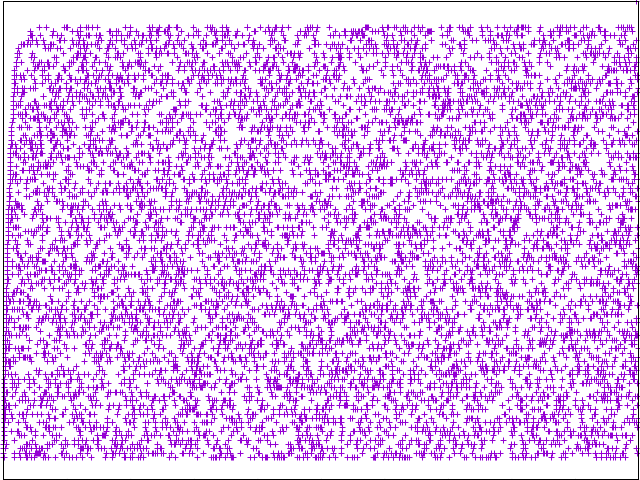

The ideal result is a uniformly distributed graph, meaning requests are splayed evenly across the available backends.
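Uniformity can also be put into numbers rather than judged by eye. The sketch below is one possible summary, assuming the input is the list of backend IPs in request order: how many backends were hit, the share of traffic taken by the busiest one, and a chi-square statistic against a perfectly even split. The function name `spread_summary` is hypothetical, and these metrics were not part of the original test.

```python
from collections import Counter


def spread_summary(ips, backend_count):
    """Summarize how evenly requests were spread over backend_count backends."""
    if not ips:
        raise ValueError("no requests recorded")
    counts = Counter(ips)
    total = len(ips)
    expected = total / backend_count
    # Chi-square against a uniform split over the backends that were hit.
    chi_square = sum((c - expected) ** 2 / expected for c in counts.values())
    # Each backend that was never hit contributes (0 - expected)^2 / expected.
    chi_square += (backend_count - len(counts)) * expected
    return {
        "backends_hit": len(counts),
        "busiest_share": max(counts.values()) / total,
        "chi_square": chi_square,
    }
```

A perfectly even run gives a chi-square of 0 and a busiest share of 1/backend_count. A client pinned to one backend gives a busiest share of 1.0.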

Java Simple LB:

While not included here, setting the DNS cache to 0 resulted in all requests targeting one backend at a time, rotating every second.

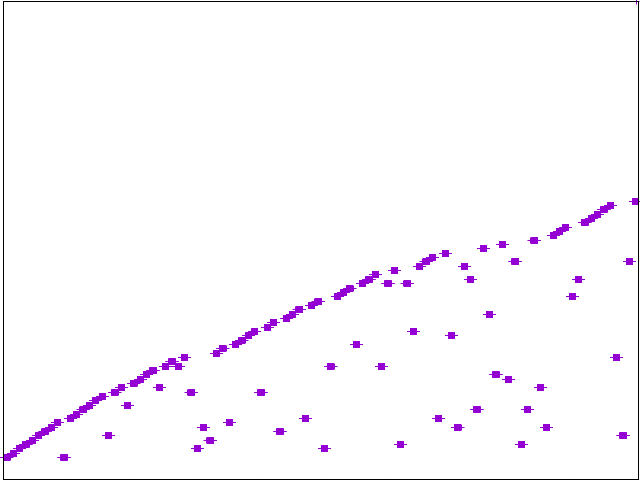

Java Persistent LB:

NodeJS Simple LB:

Great distribution here.

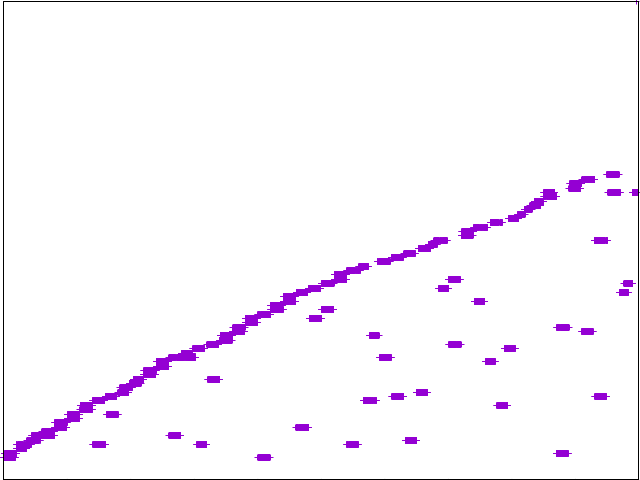

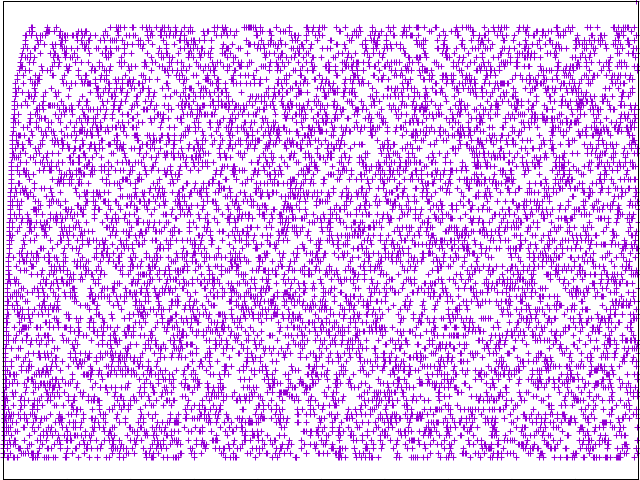

NodeJS Persistent LB:

Node with connection pooling generally selects one backend for a short period and sends all traffic to it.
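Why a persistent connection produces this pattern can be shown with a toy model. The Python sketch below assumes a resolver that rotates its answer on every lookup and a client that only resolves when it opens a new connection. The `simulate` function and its `requests_per_connection` parameter are hypothetical simplifications, and this is not a description of Node's actual pooling logic.

```python
import itertools


def simulate(requests, backends, requests_per_connection):
    """Return the backend serving each request in a toy keep-alive model."""
    if requests_per_connection < 1:
        raise ValueError("requests_per_connection must be at least 1")
    rotation = itertools.cycle(backends)  # stand-in for a rotating DNS answer
    served = []
    current = None
    for i in range(requests):
        # A new connection (and therefore a new lookup) happens only when
        # the previous connection has been used up.
        if i % requests_per_connection == 0:
            current = next(rotation)
        served.append(current)
    return served
```

With `requests_per_connection=1` the traffic rotates through every backend. With a larger value, each backend receives a burst of requests before the client moves on, which matches the pinned-for-a-while shape described above.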

PHP Simple LB:

PHP Persistent LB:

Python Simple LB:

Python Persistent LB:

Ruby Simple LB:

Ruby Persistent LB:

Conclusion

Platform DNS behavior varies widely, and DNS performs poorly in a service-discovery environment unless specific care is taken. DNS is not normally something a developer is concerned with, so in my opinion it is likely to be overlooked. A proper load balancer or service discovery integration is going to be more successful in the long run and will avoid unexpected DNS pitfalls.

If I’ve missed something in how the client apps should behave, please submit a PR to the appropriate application repo and I’ll integrate the changes into this post.